Survivorship Bias in Curriculum Design

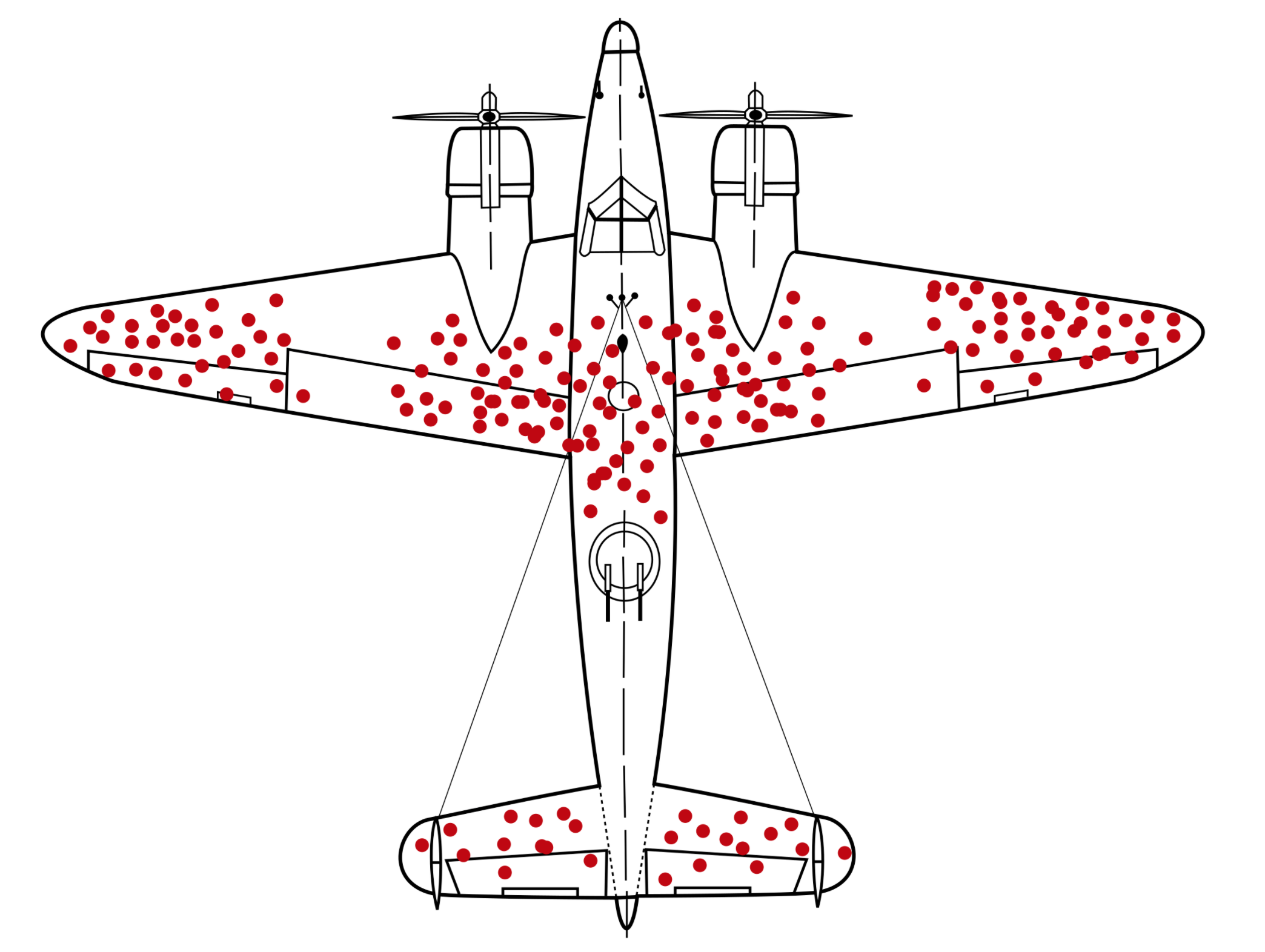

There is an extraordinary image of a fighter plane marked up with red dots. It illustrates a study by the US military, which wanted to protect its planes better. The idea was to map where planes had been shot and then to armour those parts of the plane selectively.

Red dots on a plan of a military plane, indicating the location of bullet holes after missions. Credit: Martin Grandjean, McGeddon, and Cameron Moll. Image available at https://en.wikipedia.org/wiki/Survivorship_bias#/media/File:Survivorship-bias.svg

But the image only shows where the planes that returned had been shot. A shot through the engine or the cockpit seems more likely to cause a crash than one through the wing. The red dots do not mark the places needing armour; they mark the places that don’t.

This misinterpretation is often referred to as ‘survivorship bias’. In general, survivorship bias occurs whenever a sample systematically leaves out the cases that didn’t make it. Hits to the engine should appear as dots on this figure, but the planes hit there weren’t sampled because they crashed.

Survivorship bias occurs a lot in curriculum decisions in HE Chemistry. This blog post reflects on some of the ways it happens and some of the ways it can be problematic.

In general terms, I aim to show that the people making curriculum decisions are not representative: the process that selects people into such roles systematically excludes certain people and perspectives, just as the bullet mapping ignored certain planes. I am not thinking of any specific university; rather, I am drawing on my broad experience of, and discussions about, general features of UK HE curriculum development in chemistry.

Content and Coverage

Traditional content in a chemistry degree is what served current staff well. They found it stimulating and useful; it supported them to become who they became.

This is a survivorship bias because it doesn’t consider the people who didn’t reach faculty positions. Did the content of the degree thrill the worst-performing student in the class of 1990? Did it appeal to the kid who had to give up on HE to support their family after A Levels? Did it serve the friends they sat O Levels with, who left school at 16?

These considerations are important because the student experiences of staff were not representative then, and are even less representative now. Any decision based on those experiences will encode survivorship bias into the curriculum.

“Every student should know the Thorpe-Ziegler reaction.” “I didn’t think I’d need to know about radicals but then I did a postdoc in photophysics where I found it really useful.” “It’s important that students can prove that the inverse of a conjugate matrix is equal to the conjugate of its inverse if they are really going to understand quantum.” Statements like these seem perilous to me, because the curriculum decisions they support can alienate students who don’t (already) look like today’s curriculum designers. It’s a good thing to enjoy what you studied, but students might want something different (e.g. an understanding of how new materials could benefit society, or a module on climate change).

Volume of Academic Work

Student workload is notoriously hard to quantify, but it is heavy in chemistry degrees. Contact hours are high (perhaps around 25 h/week), which leaves a narrower margin for independent study than in subjects with fewer contact hours. The workload will be lighter for students who arrive well prepared for the degree (e.g. by a further maths A Level, or by parents who explained what HE involves), and students differ in the maximum hours they can give to study (e.g. a student with a part-time job or a chronic illness might not be able to do 60 h/week of academic work).

So how do we consider student workload? When we add a pre-lab or write a difficult exam question, when do we say ‘actually that’s too much’?

The intuitive approach is to draw on our own experiences as students. “It never did me any harm.” “First year is hard, but it’s about learning how to manage your time.” “The lectures are a good starting point, but it’s probably best to read Chapter 7 of Shriver as well.” “I was maybe a bit quicker than my peers, but I had plenty of time to play cricket in the summer.”

All of these self-referential expectations risk creating survivorship bias when they are used to make decisions about other people. Academic staff typically survived their degrees in a relatively benign climate of elite access to HE and generous student funding. They didn’t drop out (I sometimes wonder what the chemistry dropouts of 1980 would say about workload if you asked them), they didn’t come through a school system squeezed by post-2008 austerity or post-2020 COVID, and they didn’t study under the current form of the RSC lab hours requirement.

There are people who seem to ‘get it’ without needing to hit the books, but what about the student who struggles with a concept and needs to spend an extra ten hours reading Atkins? Are both of these experiences in the histories of staff designing a curriculum? When we are forced to make a pragmatic workload policy for the ‘average’ student, who decides what ‘average’ means?

Didactic Teaching and Problem Solving Assessments

Chemistry is a body of knowledge that is often represented as a bundle of facts, e.g. in syllabus documents that list content. While a traditional lecture can be used deliberately to promote active learning, the dominant mode of HE instruction in Chemistry is the didactic lecture, in which staff talk and students take notes.

This contrasts with assessment strategies built around problem solving. It is common, perhaps even normal, to teach students about the subject without teaching them how to solve problems in it. Misaligned teaching and assessment is fine if you happen to ‘get it’, but terrible if you don’t. If you survived that system, you might not see the inequity of misaligned teaching, because you succeeded anyway.

Constructive alignment of teaching and assessment is a rare example of a strategy that mitigates survivorship bias. By deliberately considering how teaching and assessment relate to each other, it becomes possible to assess students on what they can fairly be expected to have grasped from the teaching.

Mitigating Circumstances Procedures/Inclusivity

Current staff survived a system that was fundamentally inhospitable to people with characteristics that are now legally protected. The Equality Act became law in 2010, so it did not underpin the education of most academics. On the academic timescale, reasonable adjustments in education, in their current form, are a new thing.

Survivorship bias analysis forces us to ask ‘who didn’t make it under the pre-2010 regime?’ Systems and procedures built on the old assumption that students were fundamentally fit and healthy (and perhaps fundamentally white and male) are now often inappropriate as a matter of law. Discriminatory structures were generally not holding back the people who later became academics, and this creates a survivorship bias along equality, diversity and inclusion (EDI) lines.

Have we internalised equitable thinking enough for it to shine through our curriculum decisions? Pre-2010 and post-2010 courses seem very similar; perhaps the exclusionary dimensions of our current degrees mirror those of our past ones.

In my view, this problem might be particularly acute when combined with the student workload analysis. The self-advocacy required to gain access to educational equity as a disabled student takes time; if time is already tight, that’s a real issue.

Conclusion: EDI and Rigour

It’s a good thing to want to share a rigorous and stimulating curriculum with students, but we have to think really carefully about who students are. They aren’t junior versions of current staff, and a curriculum which doesn’t face this fact unflinchingly will always favour those (few) students who do resemble curriculum designers.

These conversations take us into uncomfortable spaces where we must confront the questions that survivorship bias let us ignore. Which pieces of our training were necessarily hard, and which were arbitrarily hard? Which pieces of our workload were needed, and which were just nice to see? What styles of assessment were a fair test of our abilities, and what styles were actually a test of something else?

The heart of the question is this: what are the necessary (‘no frills’) features of a superb chemist? (Perhaps the question the US military were really asking was: what are the necessary features of a superb plane?) I worry that unless we focus on the bare minimum requirements for excellence, there will always be pressure to add unnecessary requirements that make courses exclusionary, just like adding armour to the parts of a plane that don’t need it. There is a useful opportunity here to use the problem of survivorship bias to explore the essence of an excellent graduate (perhaps even exploring with students what this means in 2021).

This will certainly require a thorough grounding in facts and principles, but perhaps not every student needs to know the Thorpe-Ziegler reaction. It will certainly demand many hours doing academic work, but perhaps not sixty hours per week. It will certainly require expert problem solving, but perhaps on material students have been given clear direction to master.

A clearer vision for excellence will help us understand how to support all our students to reach it. This will have particularly powerful effects on the inclusivity of our chemistry degrees, with knock-on effects for the accessibility of our profession. In my view, this is worth the discomfort we must endure by confronting the ways that we survived an unfair system: how bullets passed through our wings rather than our engines.